My research interests lie at the intersection of intergroup cognition and

political psychology. I study how humans form and

perceive social groups (i.e., social categorization), how people think once these

groups are formed (e.g., group-based motivated cognition), and the behavioral

ramifications of group membership (e.g., intergroup violence). I am particularly

interested in the dual process by which representations of social groups both

structure and are structured by political belief systems. To investigate these

topics, I use methods from social psychology (e.g., survey experiments), cognitive

science (e.g., psychophysics), and computational social science (e.g., agent-based

modeling, network analysis).

Publications / Working Papers

Ghezae, I., Yang, F., & Yu, H. (2025). On the perception

of moral standing to blame. Open Mind.

Abstract

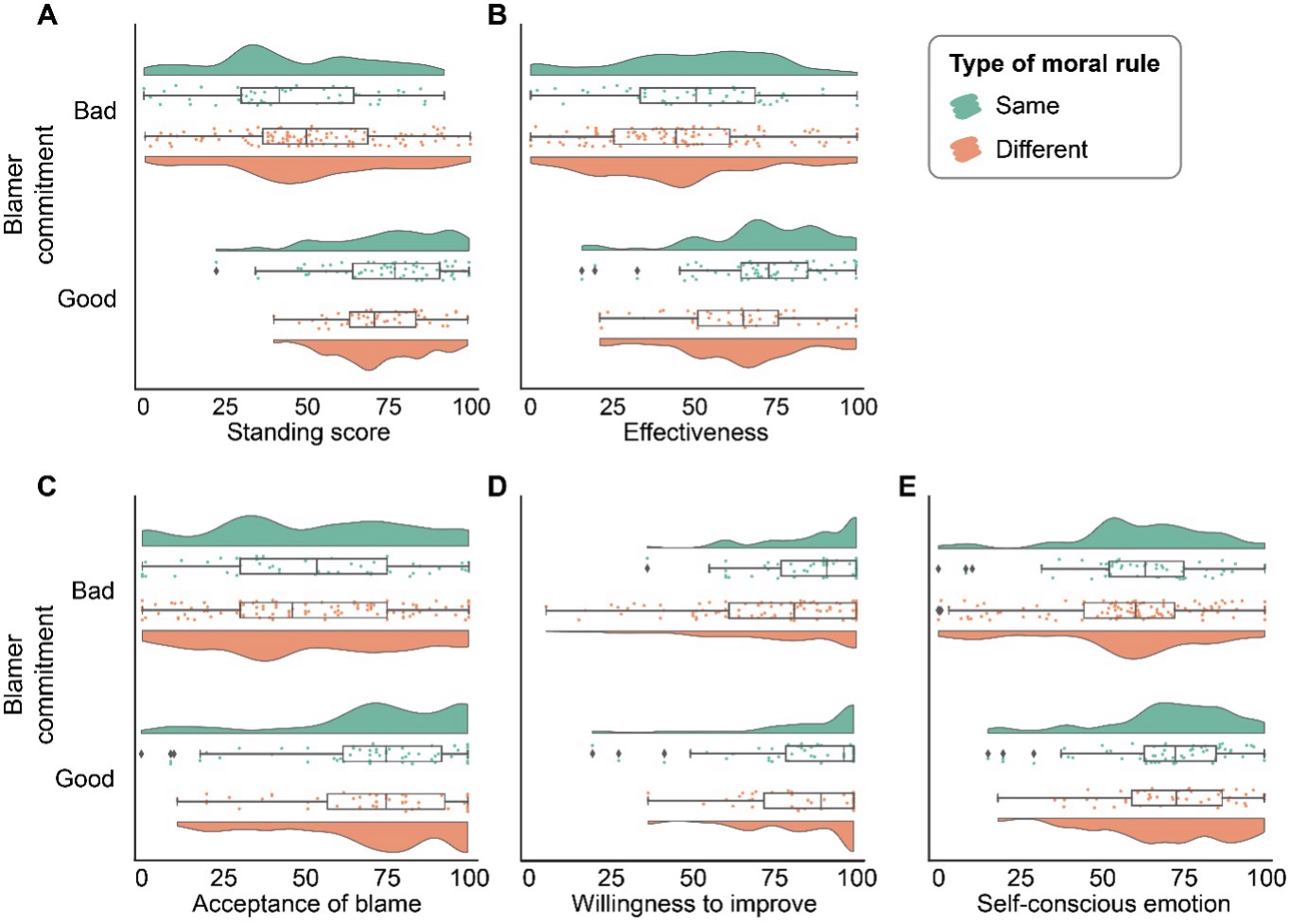

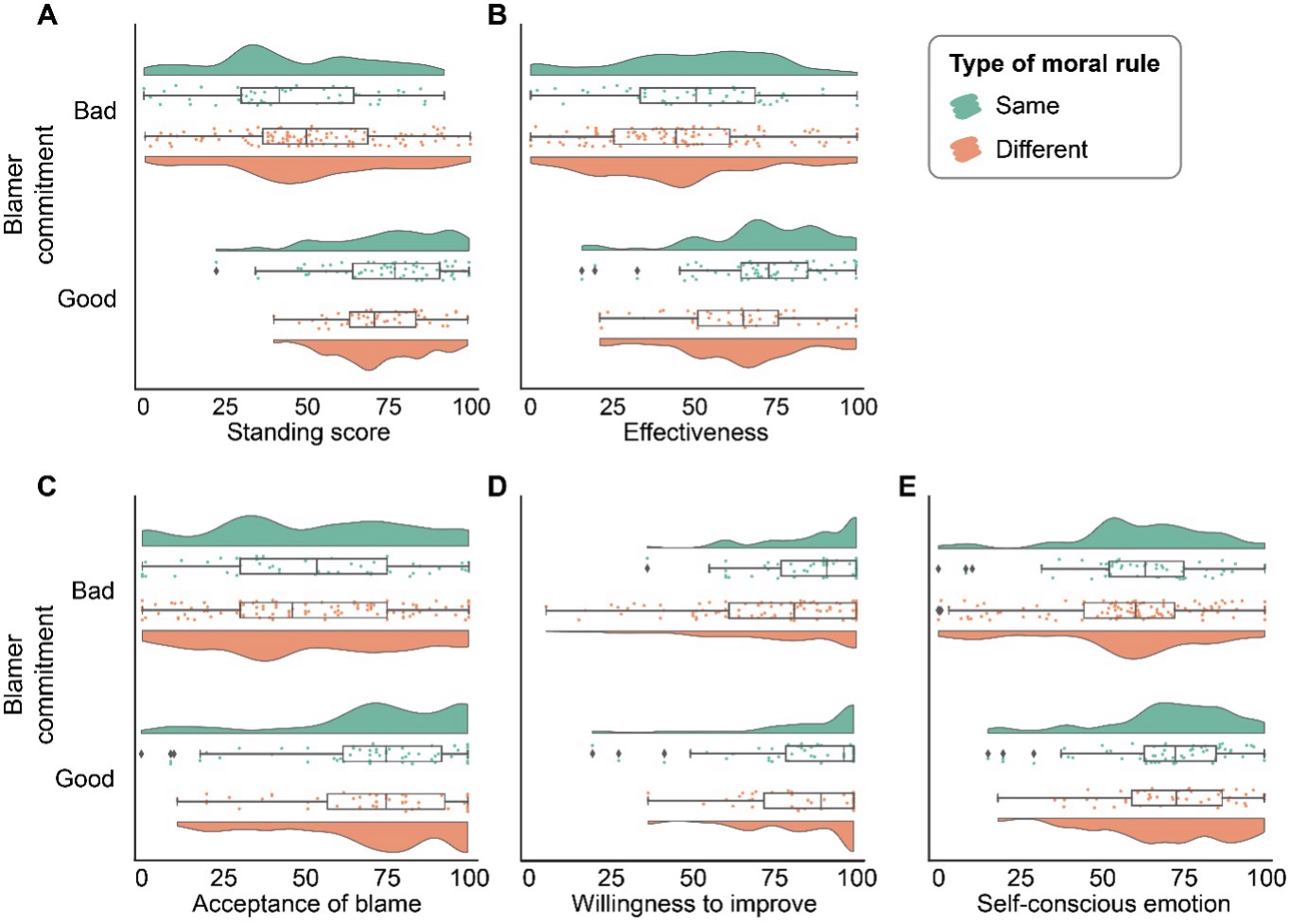

Is everyone equally justified in blaming another's moral transgression? Across

five studies (four pre-registered; total N = 1,316 American

participants), we investigated the perception of moral standing to

blame--the appropriateness and legitimacy for someone to blame a moral

wrongdoing. We propose and provide evidence for a moral commitment hypothesis--a

blamer is perceived to have low moral standing to blame a moral transgressor if

the blamer demonstrates weak commitment to that moral rule. As hypothesized, we

found that when blamers did not have the chance or relevant experience to

demonstrate good commitment to a moral rule, participants generally believed

that they had high moral standing to blame. However, when a blamer demonstrated

bad commitment to a moral rule in their past behaviors, participants

consistently granted the blamer low moral standing to blame. Low moral standing

to blame was generally associated with perceiving the blame to be less

effective and less likely to be accepted. Moreover, indirectly demonstrating

moral commitment, such as acknowledging one's past wrongdoing and

feeling/expressing guilt, modestly restored moral standing to blame. Our

studies demonstrate moral commitment as a key mechanism for determining moral

standing to blame and emphasize the importance of considering a blamer's moral

standing as a crucial factor in fully understanding the psychology of blame.

Ghezae, I.*, Jordan, J. J.*, Gainsburg, I. B., Mosleh, M.,

Pennycook, G., Willer, R., & Rand, D. G. (2024). Partisans neither expect nor

receive reputational rewards for sharing falsehoods over truth online.

PNAS Nexus.

Coverage:

Harvard Business School Working Knowledge

Abstract

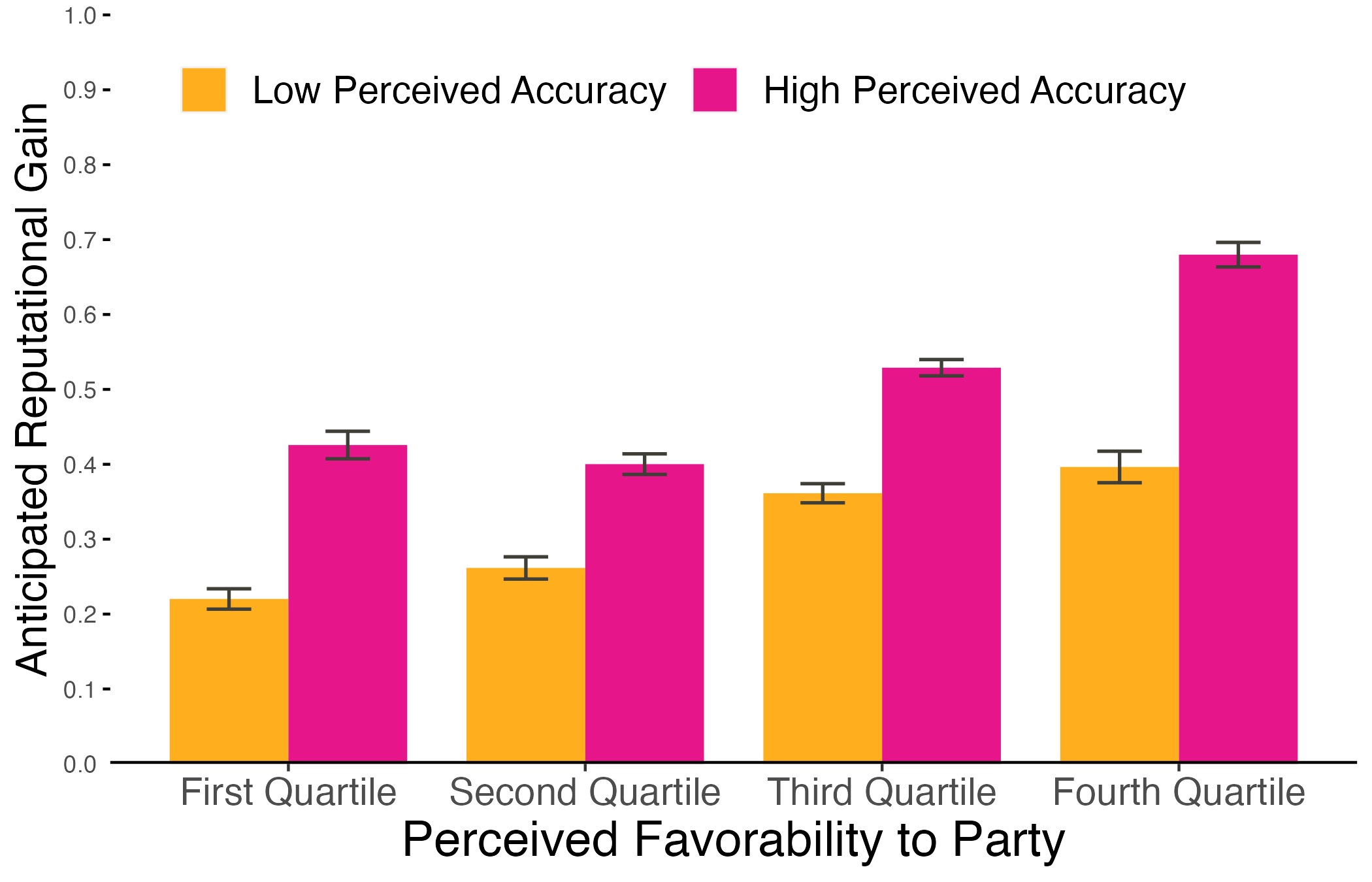

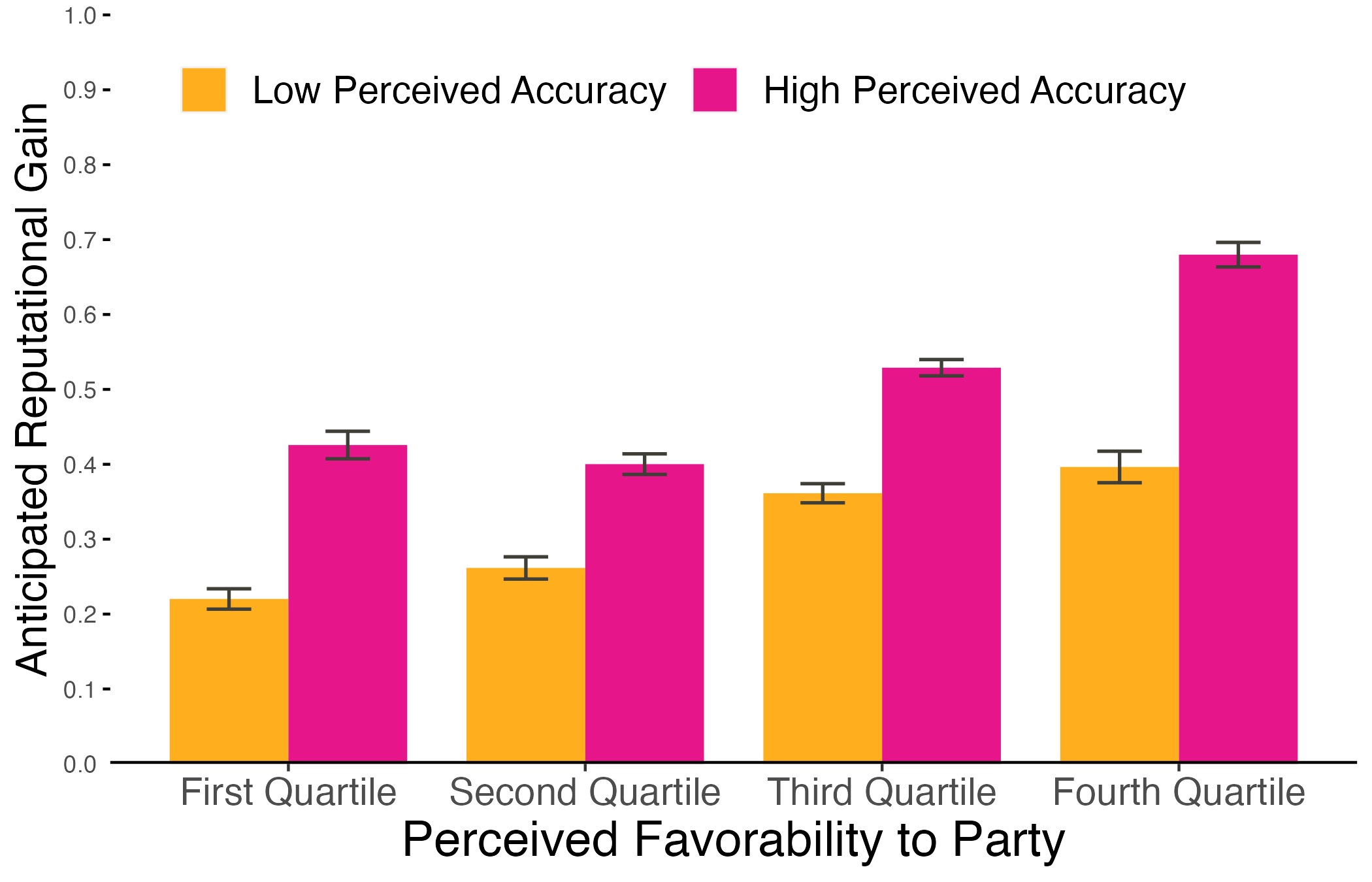

A frequently invoked explanation for the sharing of false over true political

information is that partisans are motivated by their reputations. In

particular, it is often argued that by indiscriminately sharing news that is

favorable to one's political party, regardless of whether it is true--or

perhaps especially when it is not--partisans can signal loyalty to their

group, and improve their reputations in the eyes of their online networks.

Across three survey studies (total N = 3,038), and an analysis of

over 26,000 tweets, we explored these hypotheses by measuring the reputational

benefits that people anticipate and receive from sharing different content

online. In the survey studies, we showed participants actual news headlines

that varied in (a) veracity, and (b) favorability to their preferred political

party. Across all three studies, participants anticipated that sharing true

news would bring more reputational benefits than sharing false news.

Critically, while participants also expected greater reputational benefits for

sharing news favorable to their party, the perceived reputation value of

veracity was no smaller for more favorable headlines. We found a similar

pattern when analyzing engagement on Twitter: among headlines that were

politically favorable to a user's preferred party, true headlines elicited

more approval than false headlines.

Ghezae, I., Lelkes, Y., & Cikara, M. (in prep). Who goes

with whom: The multidimensional cognitive map of U.S. political coalitions.

Abstract

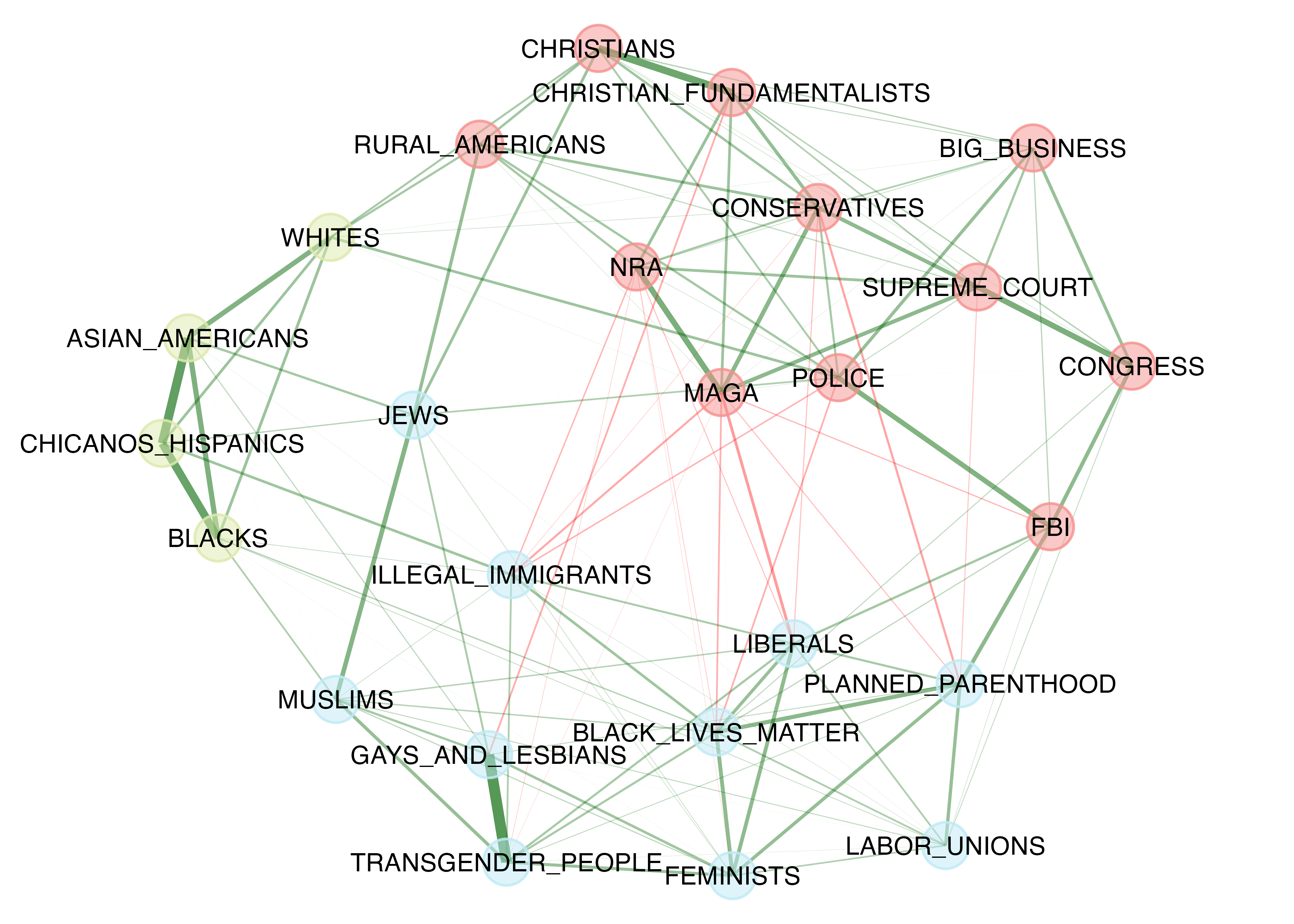

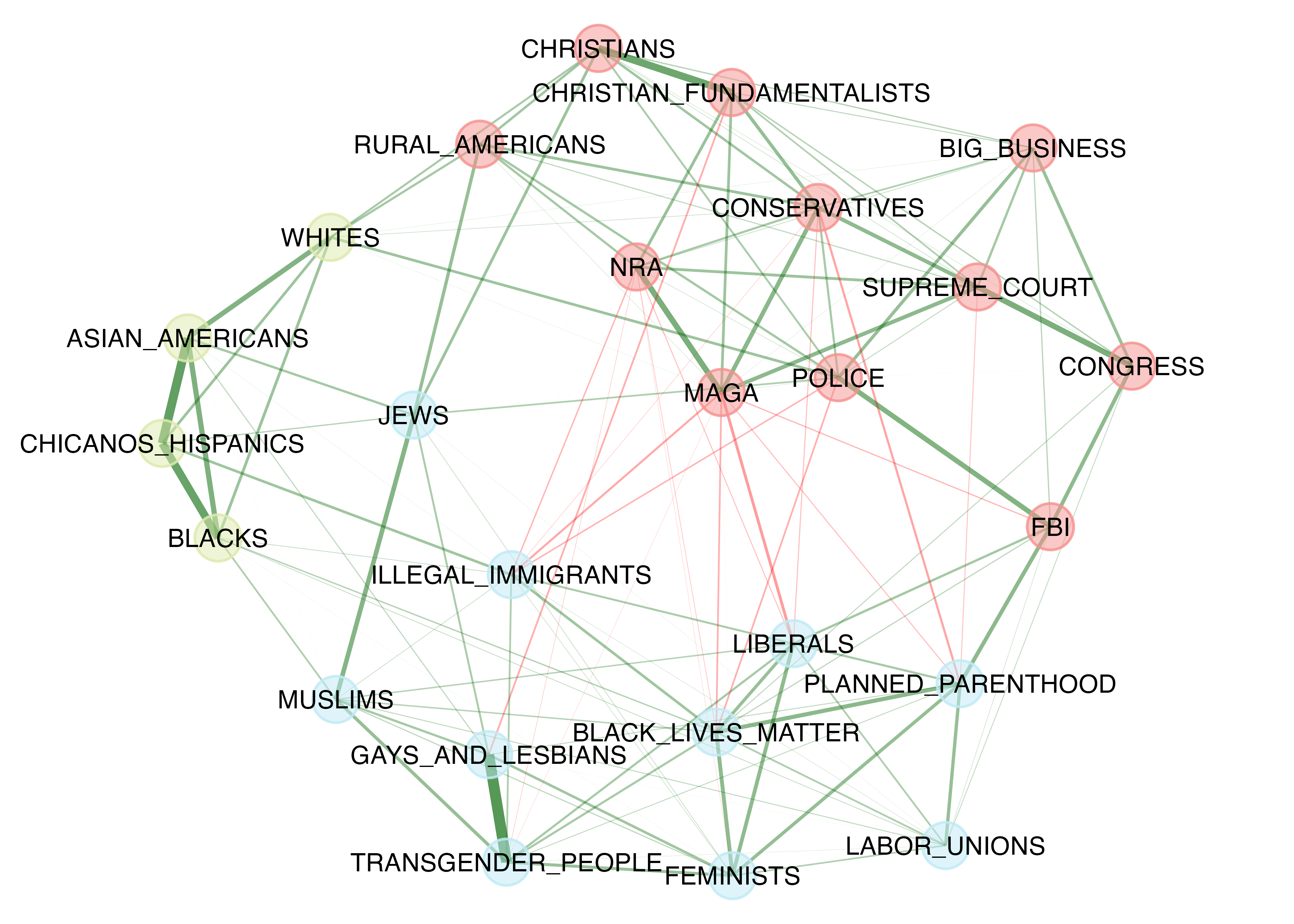

Polarization debates often presume two sorted "tribes" in American politics,

yet we lack a map of how citizens perceive the coalitions that constitute

these tribes--perceptions that structure partisan animus, guide information

processing and vote choice, and shape political behavior. Across four studies

using historical and novel survey data, we inductively discover this

cognitive map. Our analyses consistently reveal a stable, asymmetric,

tripartite structure: a unified conservative cluster alongside a durably

fractured liberal coalition composed of distinct "Ideological" and

"Demographic" wings. This structure is organized along two dimensions: a

primary ideological dimension capturing the traditional left-right conflict,

and a secondary common-fate dimension distinguishing groups perceived to be

motivated by tangible, widespread concerns from those motivated by narrower,

"special" interests. This perceptual architecture explains the entanglement

of social-group stereotypes with partisanship, reconciles rising affective

polarization with stable demographic sorting, and identifies concrete limits

on persuasion and mobilization.

Ghezae, I., Conroy-Beam, D., & Pietraszewski, D. (in prep).

Modeling the social and cognitive processes necessary to produce perceptions of

race.

Abstract

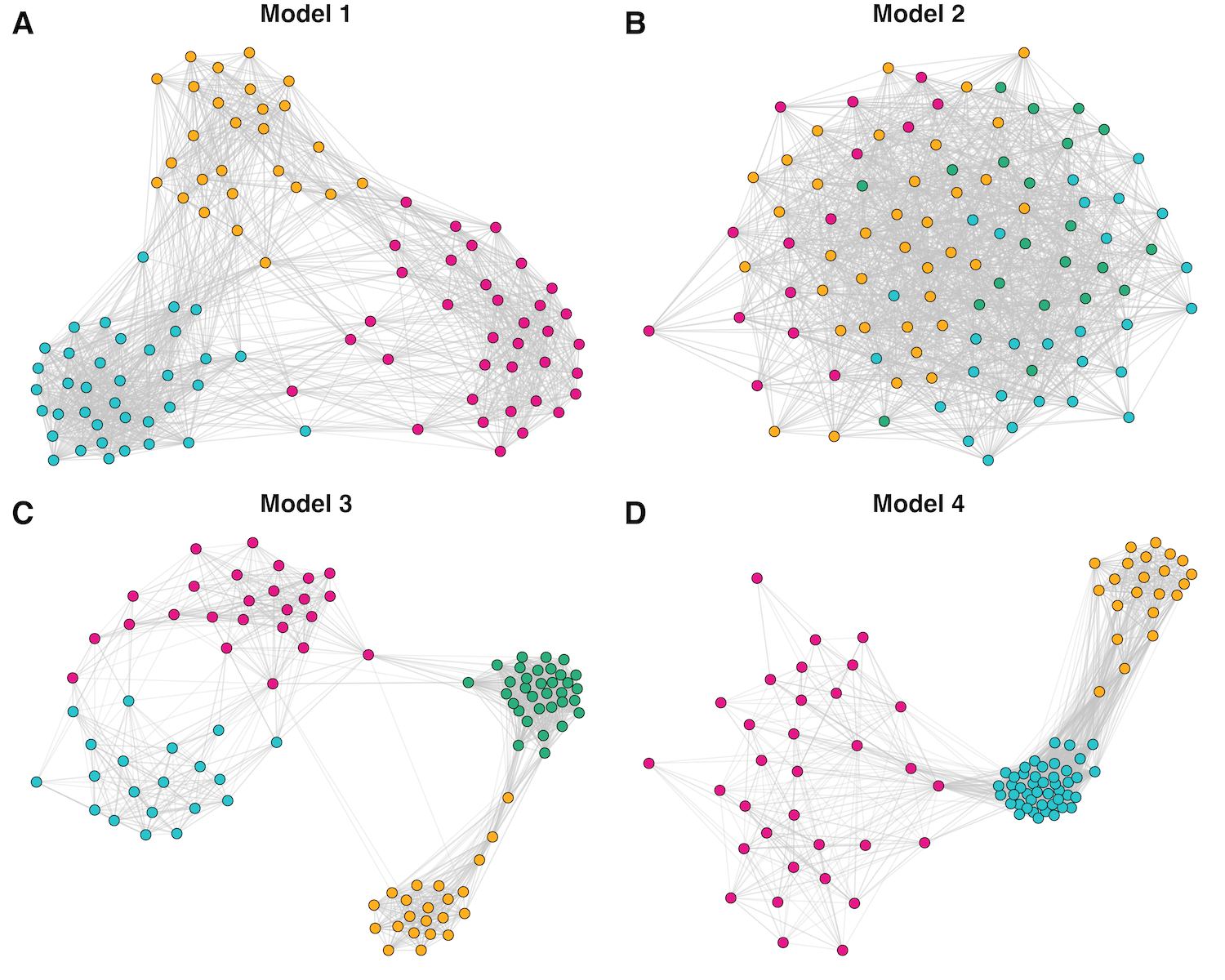

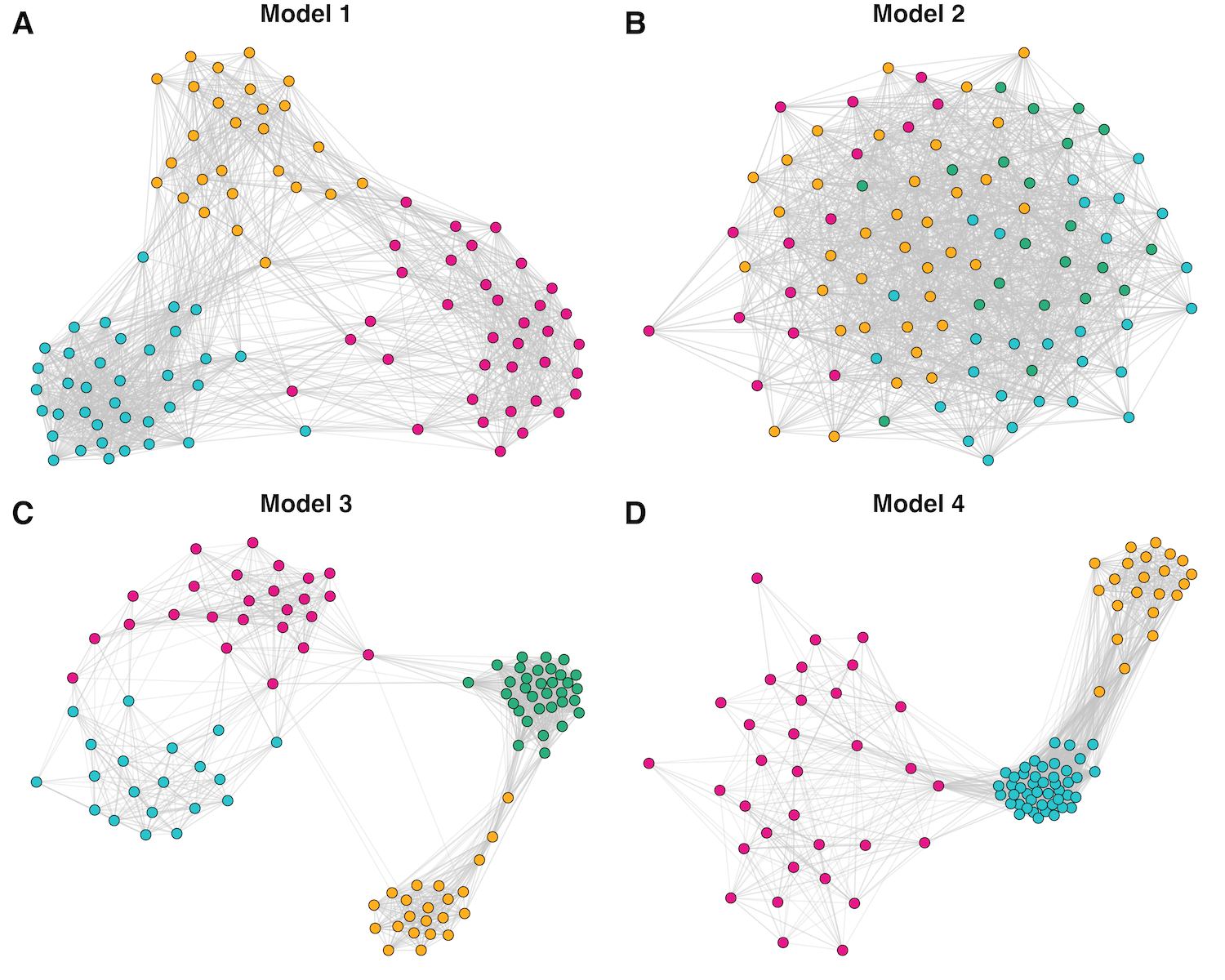

The belief that humans belong to distinct racial categories pervades our

modern world and deeply shapes our social and political life. This perception

exists despite the fact that racial categories are both evolutionarily novel

and biologically unsound. A candidate explanation for why humans categorize

others into racial groups can be found in the "alliance hypothesis of race"

which proposes that modern racial categorization is a byproduct of a

cognitive system designed for ancestral alliance detection: coalitional

psychology. Compelling evidence from memory confusion studies has supported

this hypothesis by demonstrating that redirecting coalitional psychology can

suppress racial categorization. However, the capacity for a coalitional

psychology to generate racial categories from scratch has not yet been

explored. Using agent-based models, we show that agents endowed with a

coalitional psychology that attempts to detect patterns of alliance based on

available cues can hallucinate and then reify correlations between phenotype

and allegiance, leading to the emergence of social groups that vary

systematically by phenotype. This occurs even when phenotype is in reality

distributed continuously and has no true connection to behavior. These models

provide evidence that a coalitional psychology alone can be sufficient to

create beliefs in phenotype-based social categories even when no such

categories truly exist.

Cetron, J. S.*, Ghezae, I.*, Haque, O., Mair, P., & Cikara,

M. (under review). Personal relevance of attitude importance predicts costly

intergroup behavior.

Abstract

What aspects of prejudicial attitudes toward social out-groups predict

behavior toward those groups? Social attitudes research typically links

favorability toward social out-groups to engagement in intergroup behaviors:

the stronger your (un)favorability toward a group, the more costly behaviors

you should engage in, concerning that group. But many people make

strongly-valenced favorability statements about minoritized out-groups without

engaging in corresponding costly actions. We investigate subjective attitude

importance as a competing predictor of costly intergroup behaviors. When

white respondents rate their attitudes toward minoritized racial out-groups as

more personally important to them, they are more likely to give up real money

to: (1) prevent biased attitude signaling, (2) preserve their reputations, and

(3) increase charitable donation to an out-group-supporting nonprofit.

Finally, we find that attitude measurements that centered personal relevance

better explained variance in costly behavior than generic favorability or

importance measurements. These results indicate that measuring subjective

attitude importance, with emphasis on the personal relevance of the attitude,

improves prediction of supportive and discriminatory behaviors toward

minoritized racial out-groups.

Voelkel, J. G.*, Stagnaro, M. N.*, Chu, J.*, Pink, S. L., Mernyk, J. S.,

Redekopp, C., Ghezae, I., Cashman, M., Strengthening Democracy

Challenge Finalists, Druckman, J., Rand, D. G., & Willer, R. (2024). Megastudy

testing 25 treatments to reduce anti-democratic attitudes and partisan

animosity. Science.

Abstract

Scholars warn that partisan divisions in the mass public threaten the health

of American democracy. We conducted a megastudy (n = 32,059 participants)

testing 25 treatments designed by academics and practitioners to reduce

Americans' partisan animosity and anti-democratic attitudes. We find many

treatments reduced partisan animosity, most strongly by highlighting relatable

sympathetic individuals with different political beliefs, or by emphasizing

common identities shared by rival partisans. We also identify several

treatments that reduced support for undemocratic practices--most strongly by

correcting misperceptions of rival partisans' views, or highlighting the

threat of democratic collapse--showing anti-democratic attitudes are not

intractable. Taken together, the study's findings identify promising general

strategies for reducing partisan division and improving democratic attitudes,

shedding new theoretical light on challenges facing American democracy.

Landry, A. P., Ghezae, I., Abou-Ismail, R., Spooner, S.,

August, R. J., Mair, C., Ragnhildstveit, A., Van den Noortgate, W., Gelfand, M.

J., & Seli, P. (2025). The uniquely powerful impact of explicit, blatant

dehumanization on support for intergroup violence.

Journal of Personality and Social Psychology.

Abstract

To effectively address intergroup violence, we must accurately diagnose the

psychological motives driving it. Dehumanization--the explicit and

blatant denial of an outgroup's humanity--is widely considered one such

driver, informing both scholarly theory and social policy on intergroup

violence. Nonetheless, dehumanization is often intertwined with intense

negative affect, raising concerns that dehumanization's explanatory power is

much more restricted than widely assumed. In the extreme, "dehumanization" is

merely another way to express intense dislike. If so, then theories

of dehumanization distort our understanding of the true motives driving

violence. Here, we test dehumanization's reality and explanatory power through

three complementary research streams that employ diverse methods and samples.

First, we meta-analyze existing studies on dehumanization and dislike to

establish their independent effects on violence (k = 120;

N = 128,022). We then test the generalizability of these effects

across four violent conflicts in the United States, Russia and Ukraine, Israel

and the Palestinian diaspora, and India (total N = 3,773). In these

studies, we also test whether individuals' dehumanizing responses are mere

metaphor or intended literally. Finally, we isolate dehumanization's causal

impact on violence in another US sample (N = 753). Our results

converge to demonstrate that dehumanization (a) is distinct from dislike and

often intended literally, (b) is a particularly strong predictor of support for

violence, and (c) can causally facilitate such support. Collectively, these

studies clarify our understanding of the psychology driving violence and can

inform efforts to address it.

Ashokkumar, A.*, Hewitt, L.*, Ghezae, I., & Willer, R.

(invited revision). Predicting results of social science experiments using large

language models.

Coverage:

Stanford Institute for Human-Centered AI

Abstract

To evaluate whether large language models (LLMs) can be leveraged to predict

the results of social science experiments, we built an archive of 70

pre-registered, nationally representative, survey experiments conducted in

the United States, involving 476 experimental treatment effects and 105,165

participants. We prompted an advanced, publicly-available LLM (GPT-4) to

simulate how representative samples of Americans would respond to the stimuli

from these experiments. Predictions derived from simulated responses correlate

strikingly with actual treatment effects (r = 0.85), equaling or surpassing

the predictive accuracy of human forecasters. Accuracy remained high for

unpublished studies that could not appear in the model's training data (r =

0.90). We further assessed predictive accuracy across demographic subgroups,

various disciplines, and in nine recent megastudies featuring an additional

346 treatment effects. Together, our results suggest LLMs can augment

experimental methods in science and practice, but also highlight important

limitations and risks of misuse.

Open-Source Software

Ghezae, I. (2023).

Unique Turker 2.

[Full-stack Flask app with a built-in database that can be used by Mechanical Turk

requesters to prevent duplicate HIT access from Mechanical Turk workers]

Science Communication

Ghezae, I. (2020, October 1).

The Social Media Outrage Machine: How Our Digital Worlds Distort Political

Discourse and Why This Matters.

[Opinion piece written for The Santa Barbara Independent]